I have been a research scientist (Chargé de Recherche) in Human-Computer Interaction (HCI) in the Loki project-team at the Inria Lille – Nord Europe center since 2016.

My current research focuses on the temporality of human-computer interaction, from the user's physiological and cognitive capabilities to the way interactive systems are designed and built, and on how to improve them.

This involves:

-

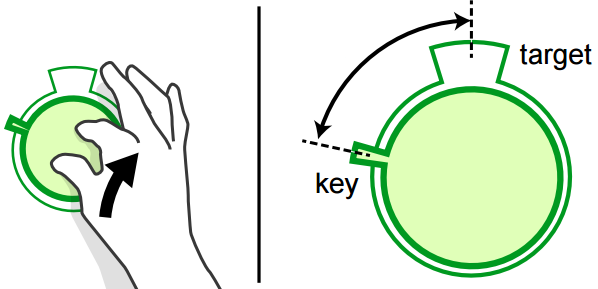

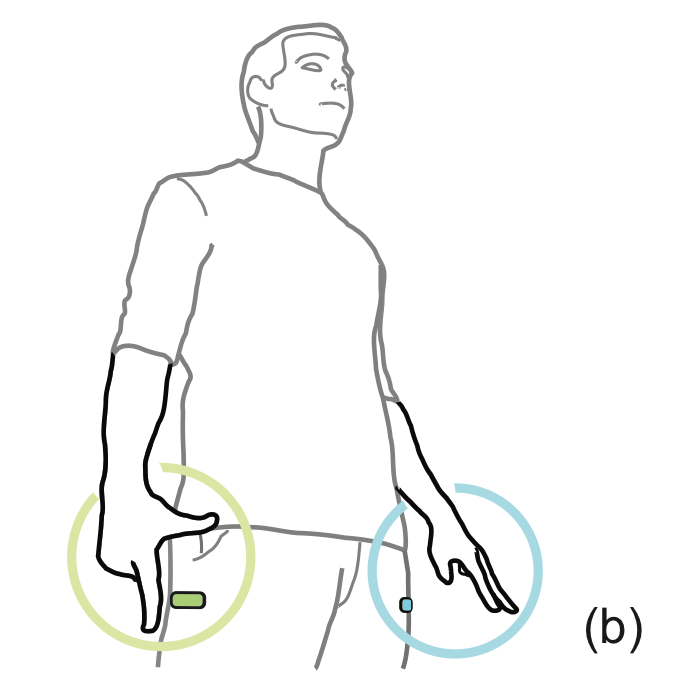

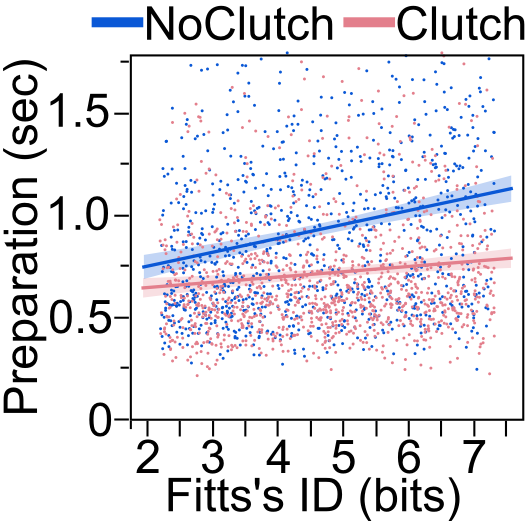

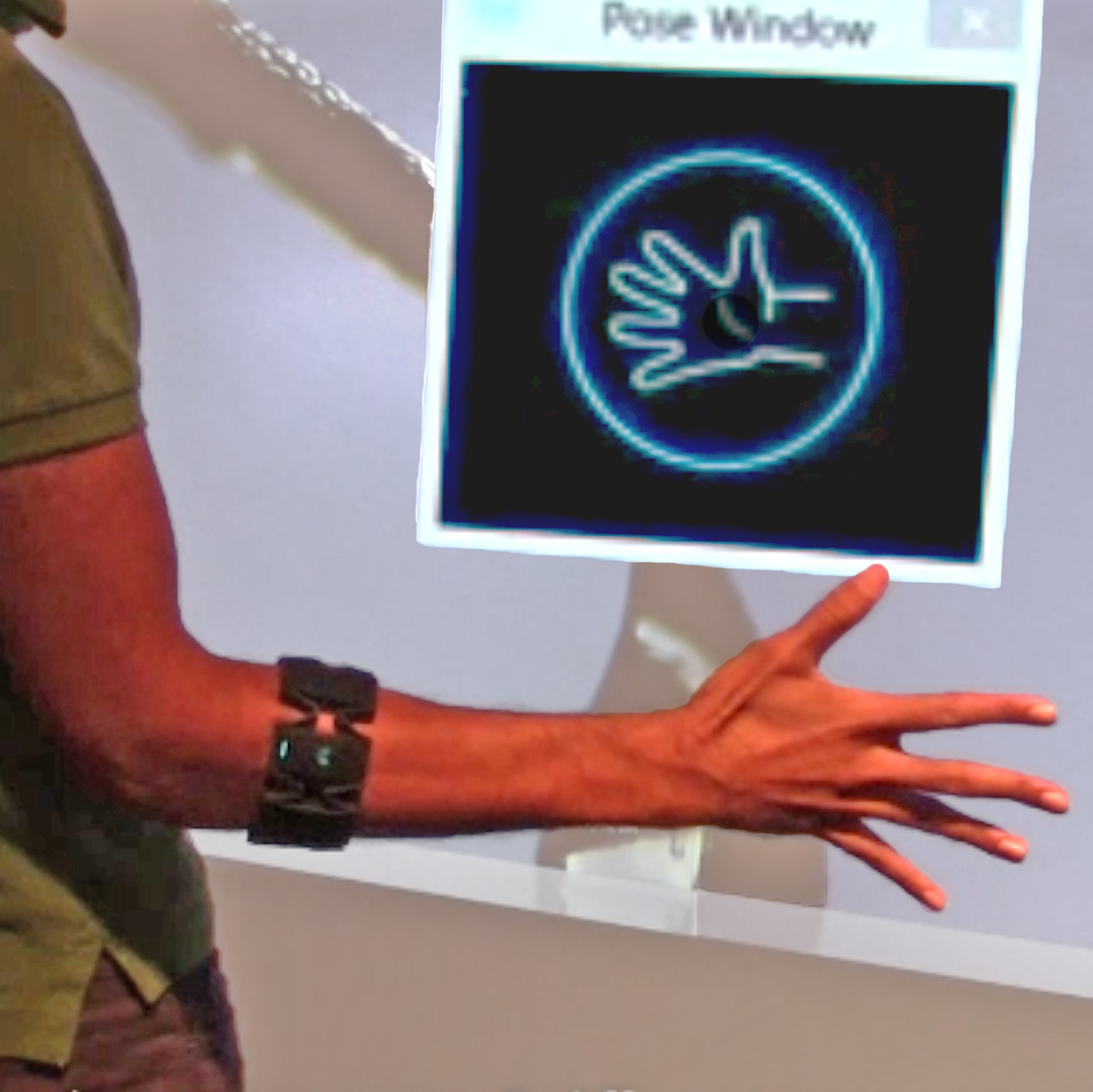

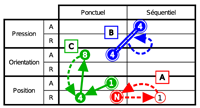

décrire et modéliser des phénomènes psychomoteurs en remettant parfois en question certaines présuppositions sur les capacités et les intérêts des utilisateurs, comme par exemple les interférences d'interaction, le “clutching”, ou encore notre étude des Marking Menus sans délais ;

-

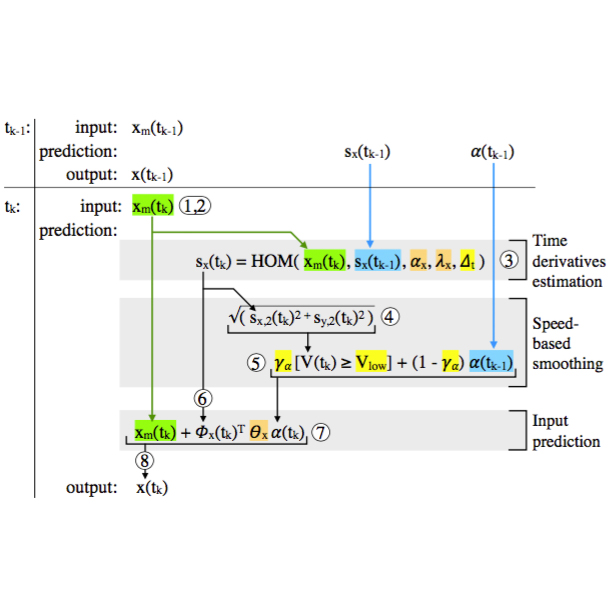

concevoir des mécanismes d'interaction qui appliquent ces connaissances pour améliorer l'utilisabilité et les performances des utilisateurs, par exemple en prévenant le jitter visuel qui peut se produire même quand chaque aspect du système fonctionne comme il le devrait, ou encore en compensant la latence de bout en bout ;

-

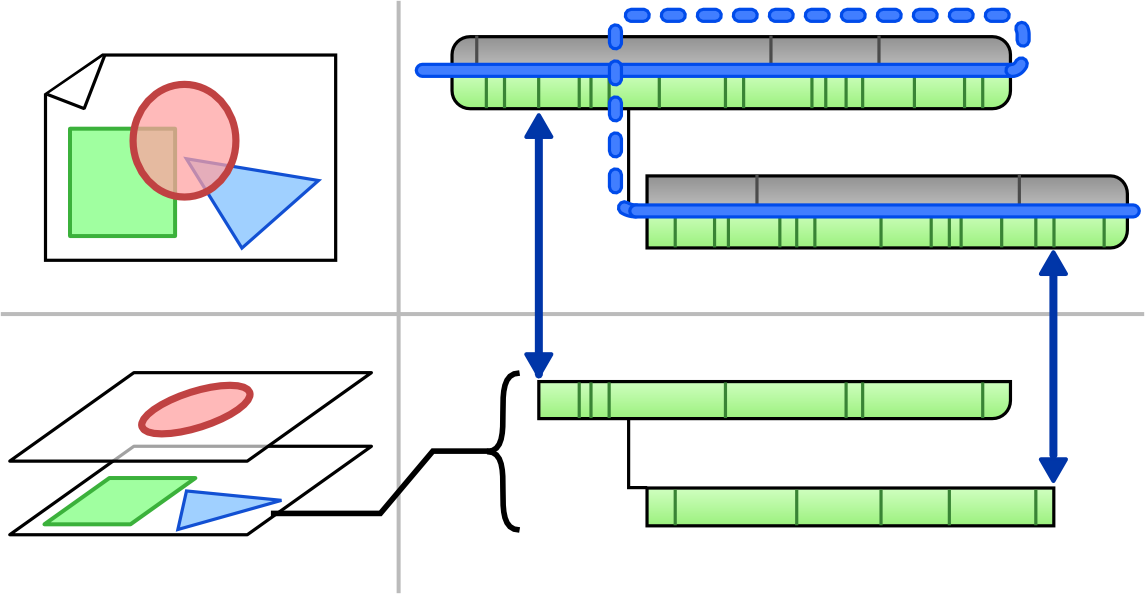

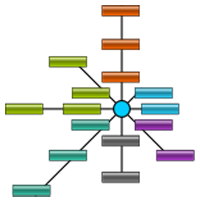

concevoir des systèmes interactifs qui permettent ou facilitent l'application de ces mécanismes, par exemple en implémentant notre modèle conceptuel des historiques d'interaction sous la forme d'une bibliothèque logicielle à part entière [en cours] pour construire des applications d'édition plus sûres et plus flexibles.

I was the principal investigator of the Causality project, funded by the Agence Nationale de la Recherche (ANR), in which I apply these principles to pointing and command histories.

I supervised the PhD theses of Philippe Schmid and Alice Loizeau on these topics, together with Stéphane Huot.

I trained as a computer science engineer, and my “research toolbox” borrows from experimental psychology, interactive systems engineering, and interaction design.

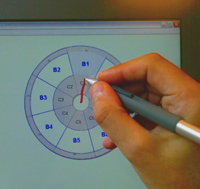

During my PhD and the postdocs that followed, I worked extensively on distant interaction with large displays, focusing on the design and evaluation of interaction techniques for cursor control (e.g. [C3, J2, C6, C12]), command selection [C4, C8], and virtual navigation [C1], with a specific interest in exploring and exploiting new sensing technologies such as motion tracking (VICON [J2], Kinect [C4], LEAP [C8], ...) and electromyographic sensors [C6].

These projects gradually led me toward more fundamental aspects of the design and implementation of interactive systems, exploring and answering persistent questions that no longer concern a single platform but every aspect of our experience of interactive systems.

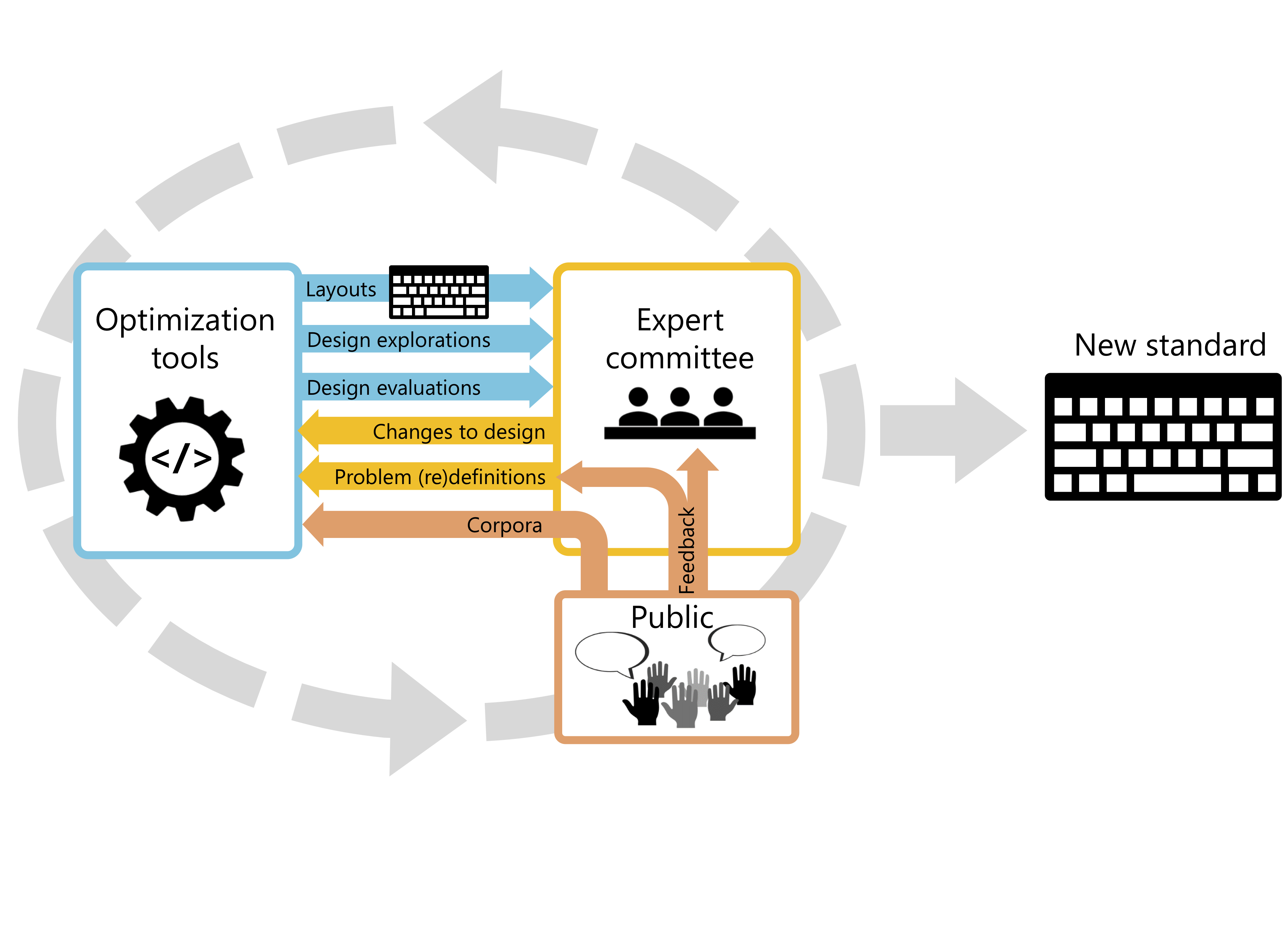

In addition, I recently contributed to the design of the first standard for the French keyboard layout (see [J3, R4, R5] and norme-azerty.fr for more information on this process), and I co-authored two high-school textbooks for a new national introductory programming course [B1, B2].