I have been a permanent researcher in Human-Computer Interaction (HCI) in the Loki lab at Inria Lille – Nord Europe (France) since 2016.

My ongoing research focuses on the temporality of human-computer interactions, from users' physiological and cognitive capabilities to the way interactive systems are designed and built, and on how to make those systems better.

This work involves:

-

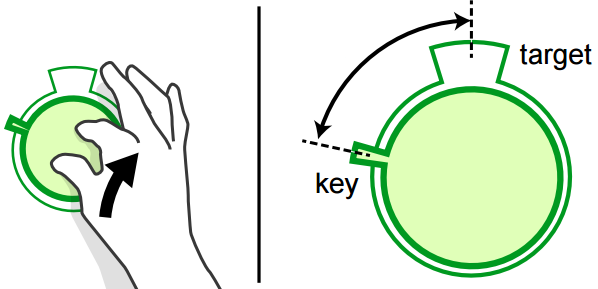

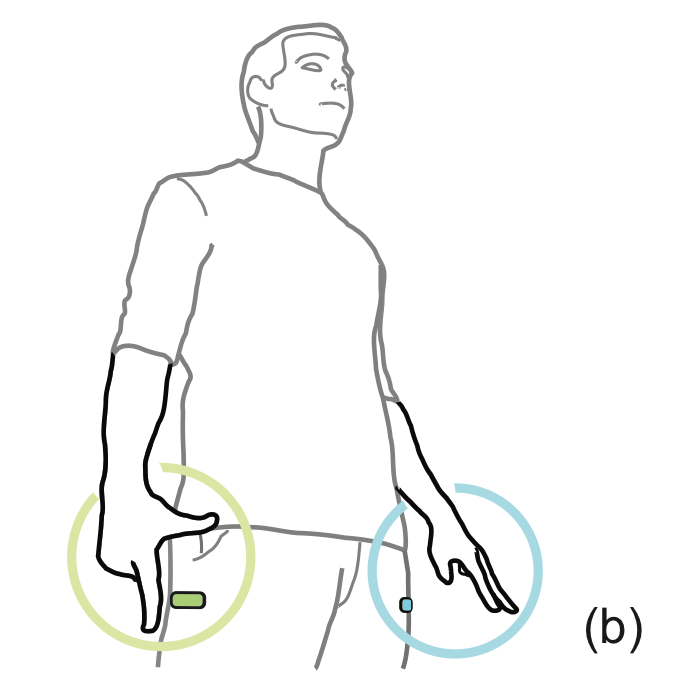

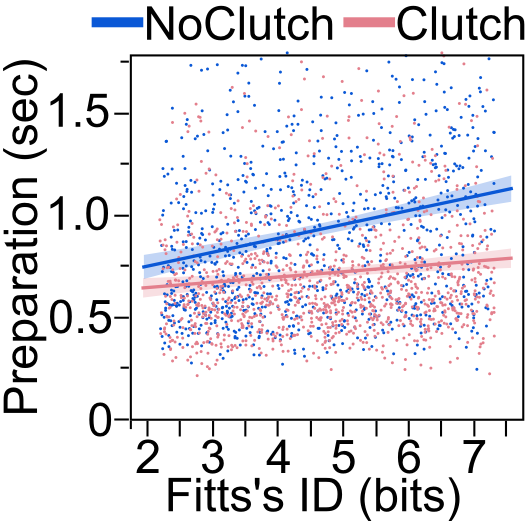

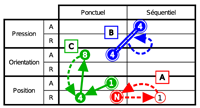

describing and modeling psycho-motor phenomena, sometimes challenging assumptions about the users' capabilities and best interests as with Interaction Interferences, clutching, or our Zero-Delay Marking Menu studies,

-

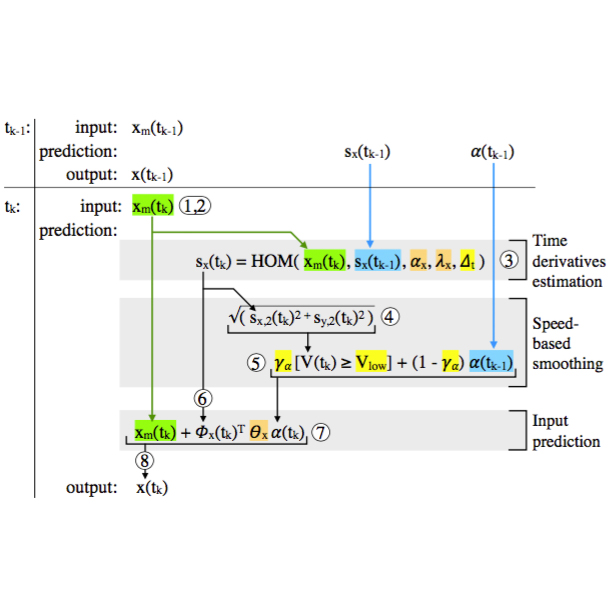

designing interaction mechanisms that apply these findings to improve usability and user performance, e.g. preventing visual jitter that occurs even though every aspect of a system works as it should, or compensating end-to-end latency,

-

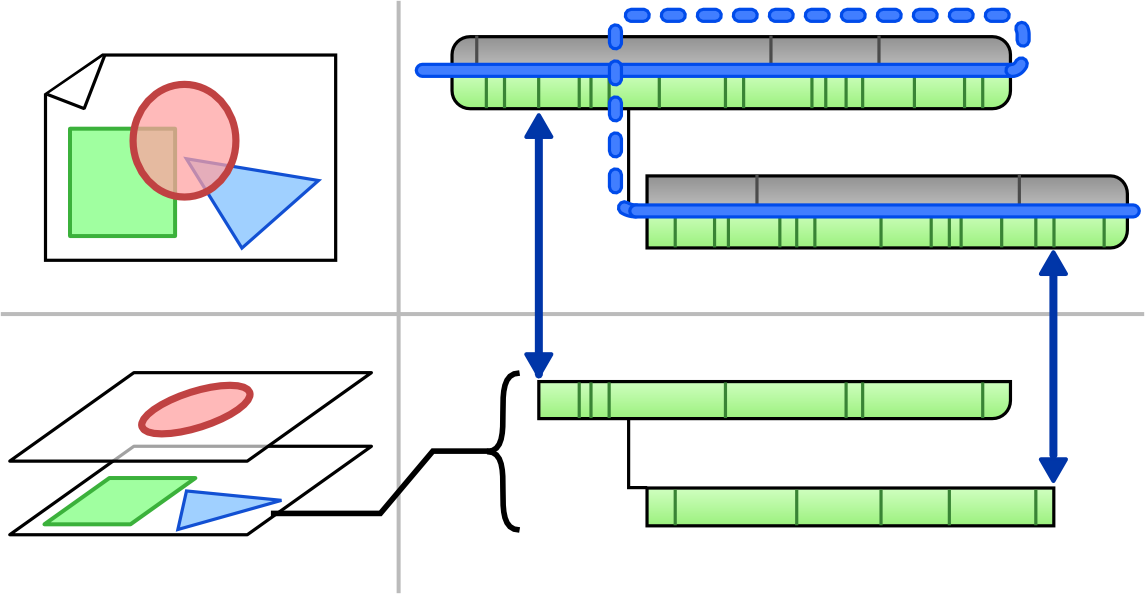

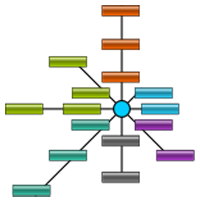

engineering interactive systems that allow or facilitate the application of these mechanisms, for instance implementing our conceptual model of interaction history as a full-fledged software library [ongoing] to build safer and more flexible editing applications.

I am the principal investigator of the Causality project, funded by the French National Research Agency (ANR), in which I apply these principles to cursor control and command histories.

I currently co-advise the PhD theses of Philippe Schmid and Alice Loizeau on these topics with Stéphane Huot.

My background is in software engineering, and my research toolbox borrows from experimental psychology, interactive systems engineering, and interaction design.

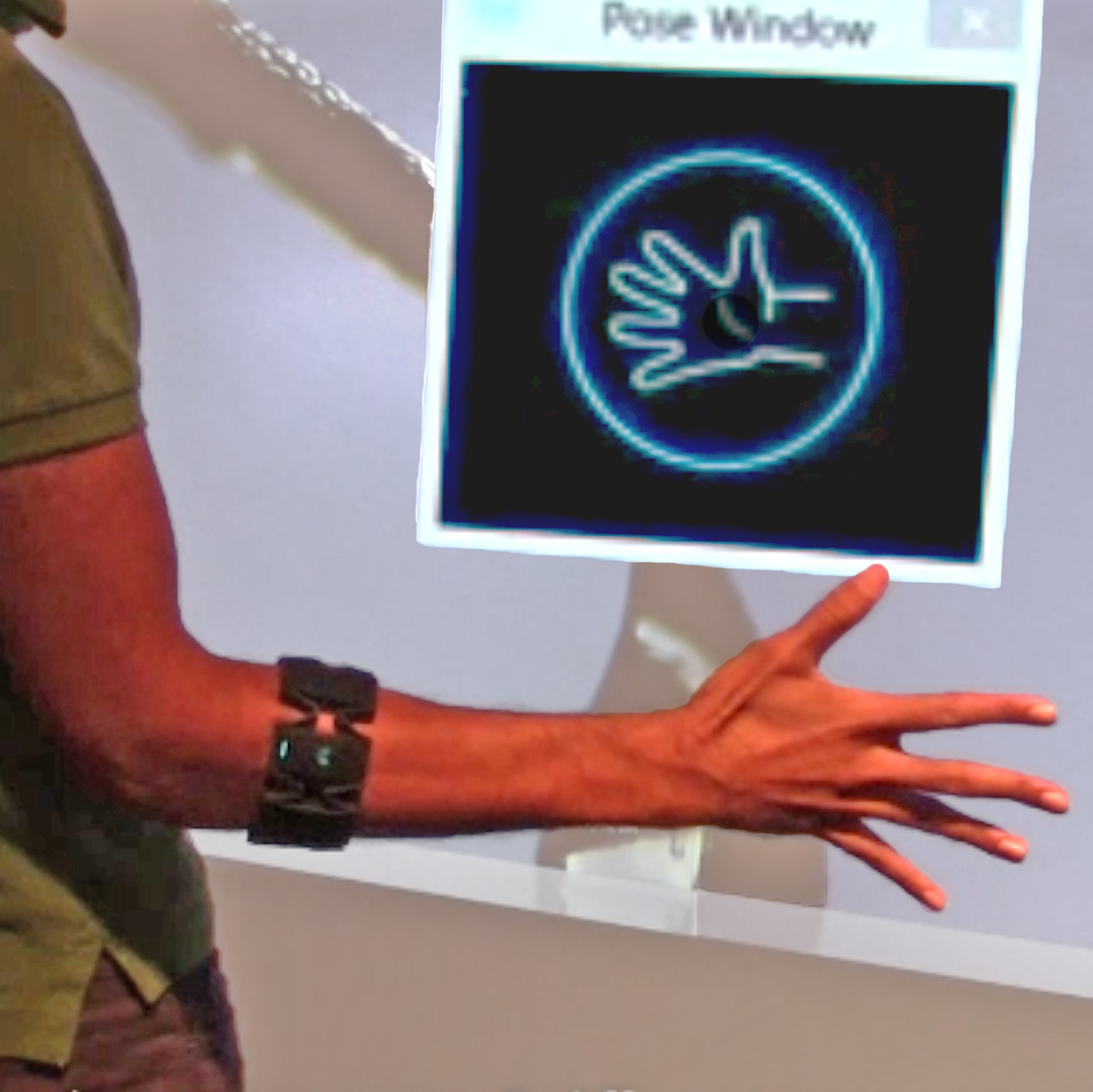

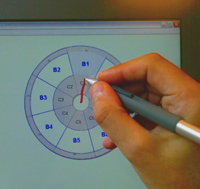

During my PhD and subsequent postdocs, I worked extensively on interacting at a distance with large displays, designing and evaluating interaction techniques for cursor control (e.g., [C3, J2, C6, C12]), command selection [C4, C8], and virtual navigation [C1], with a particular interest in exploring and leveraging then-new sensing technologies such as motion tracking (VICON [J2], Kinect [C4], LEAP [C8], ...) and electromyographic sensors [C6].

These projects progressively led me toward more fundamental aspects of interactive systems design and implementation, and toward lingering questions that no longer concern a specific platform but every aspect of our experience of interactive systems.

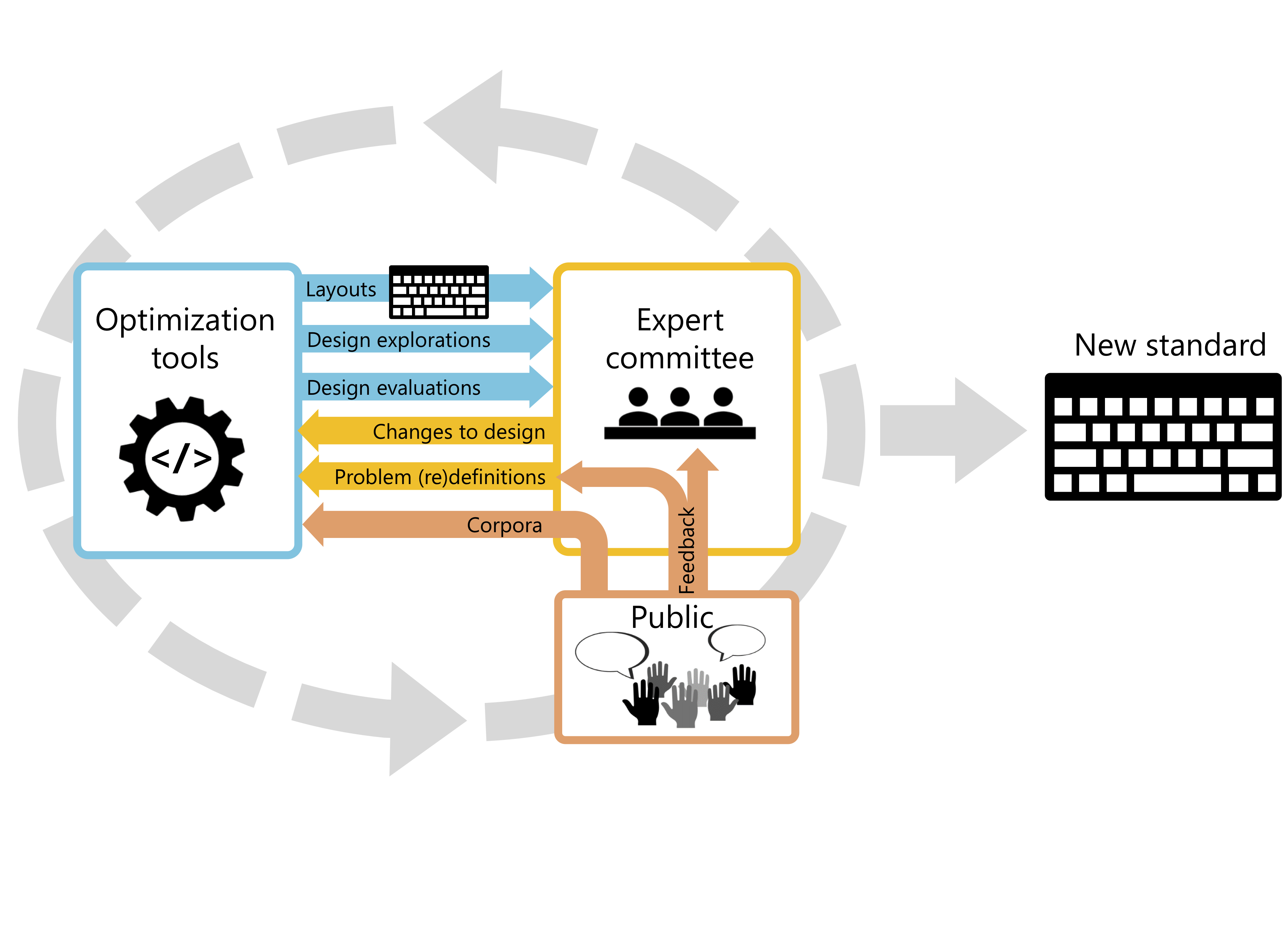

Meanwhile, I recently contributed to the design of the first standard for the French keyboard layout (see [J3, R4, R5] and norme-azerty.fr for more information on the process), and I co-authored two high school textbooks for a new nationwide introductory programming course [B1, B2].